Automation Meets Reality:

Keeping Humans In The Loop

An AP clerk notices discrepancies on nearly every line of a 200-line invoice. On closer inspection, it turns out the discrepancies are just rounding errors. By the 10th invoice with the same issue, frustration builds as the tedious reviews slow down their already packed queue.

PROBLEM OVERVIEW

When automation falls short, users pay the price

While OpenEnvoy’s automation effectively streamlined invoice processing, unresolved edge cases were slowing users down. Without a way to address these issues directly in the platform, users were forced to process invoices manually in their ERP, disrupting workflows, generating support tickets, and delaying user acceptance testing (UAT).

I led the design strategy to address the two most critical use cases first:

Rounding discrepancies: Affecting 15–20% of invoices for some customers, these small differences would trigger exceptions that required tedious manual review.

Incomplete purchase order matching: AI matching narrowed the choices to a few line items but couldn’t confidently complete the match, resulting in a high rate of exceptions.

CONSTRAINTS & CONSIDERATIONS

Design fast, but plan for scale

AI limitations vs. human intuition: Tasks that seem simple to a human—like recognizing rounding—are surprisingly difficult for AI. The solution needed to provide simple pathways for users to resolve what automation couldn’t.

Future-proofing for scalability: The broader business goal was to continue expanding user actions over time, so the design approach had to be modular and extendable.

Tight turnaround: Onboarding delays were a critical business concern. Speed-to-launch was prioritized, with enhancements like bulk actions scoped for future releases.

SOLUTION

Give users a (half) steering wheel

I designed a solution focused on empowering users to resolve exceptions directly—allowing them to close the final 5% of automation gaps. Internally, this became known as the “half-steering wheel” approach: automation drives the process, but users have the tools to take control when needed.

My solution focused on three key areas:

Centralized issue detection: There was an existing framework that surfaced exceptions to the user, so it made sense to extend that framework to support these use cases.

Relevant data surfaced contextually: Display all necessary information for users to quickly understand and resolve the issue—no digging required.

Scoped for speed, designed for scale: I prioritized delivering essential functionality first, with future plans to streamline bulk actions and introduce rule-based configurations to handle exceptions.

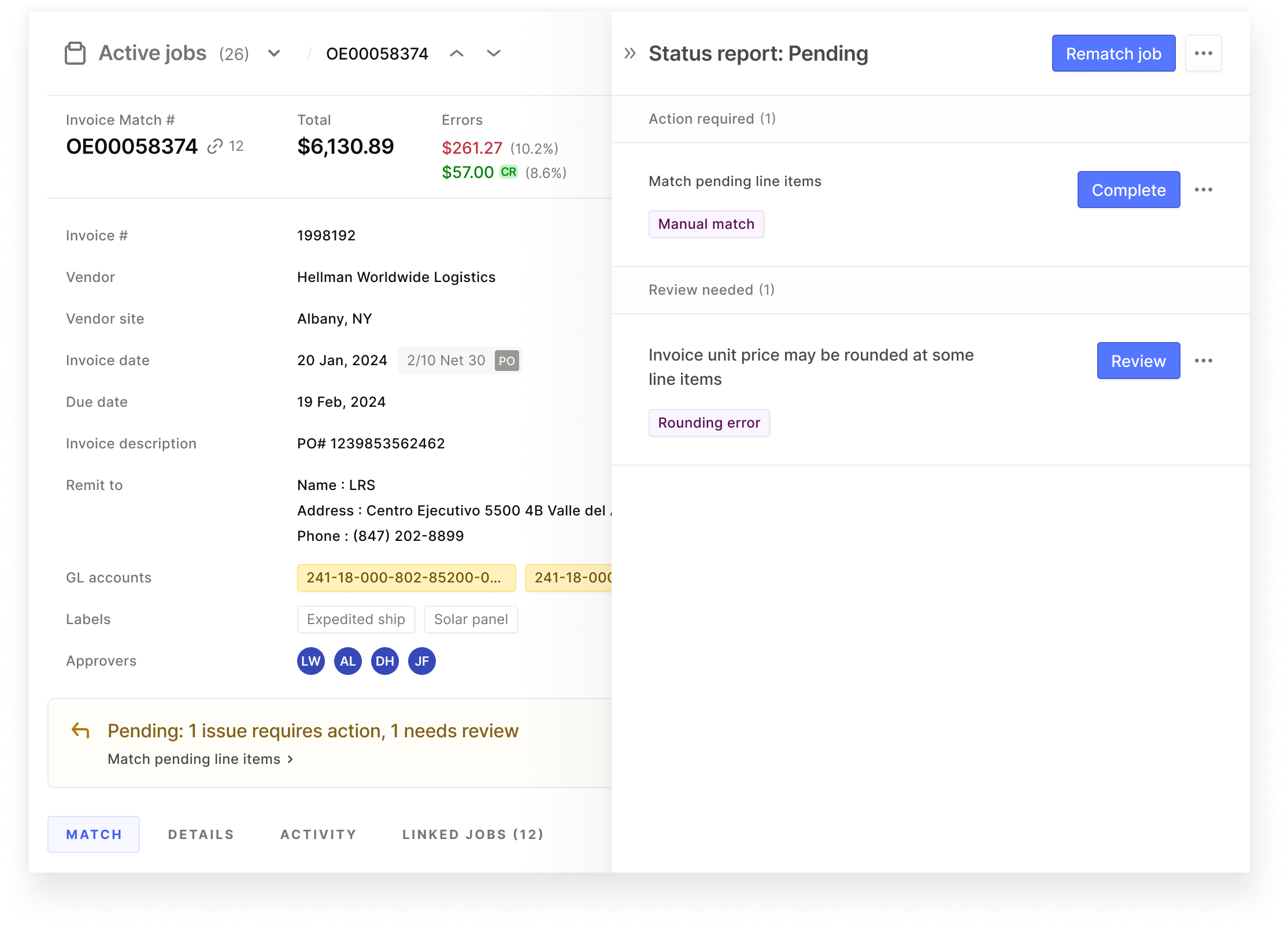

Image 1, Status Report - Surface Exceptions Proactively: I extended OpenEnvoy’s existing status report framework to flag rounding and manual PO match exceptions. Leveraging the existing system accelerated development and provided streamlined entry points to resolve the issues within a familiar UX.

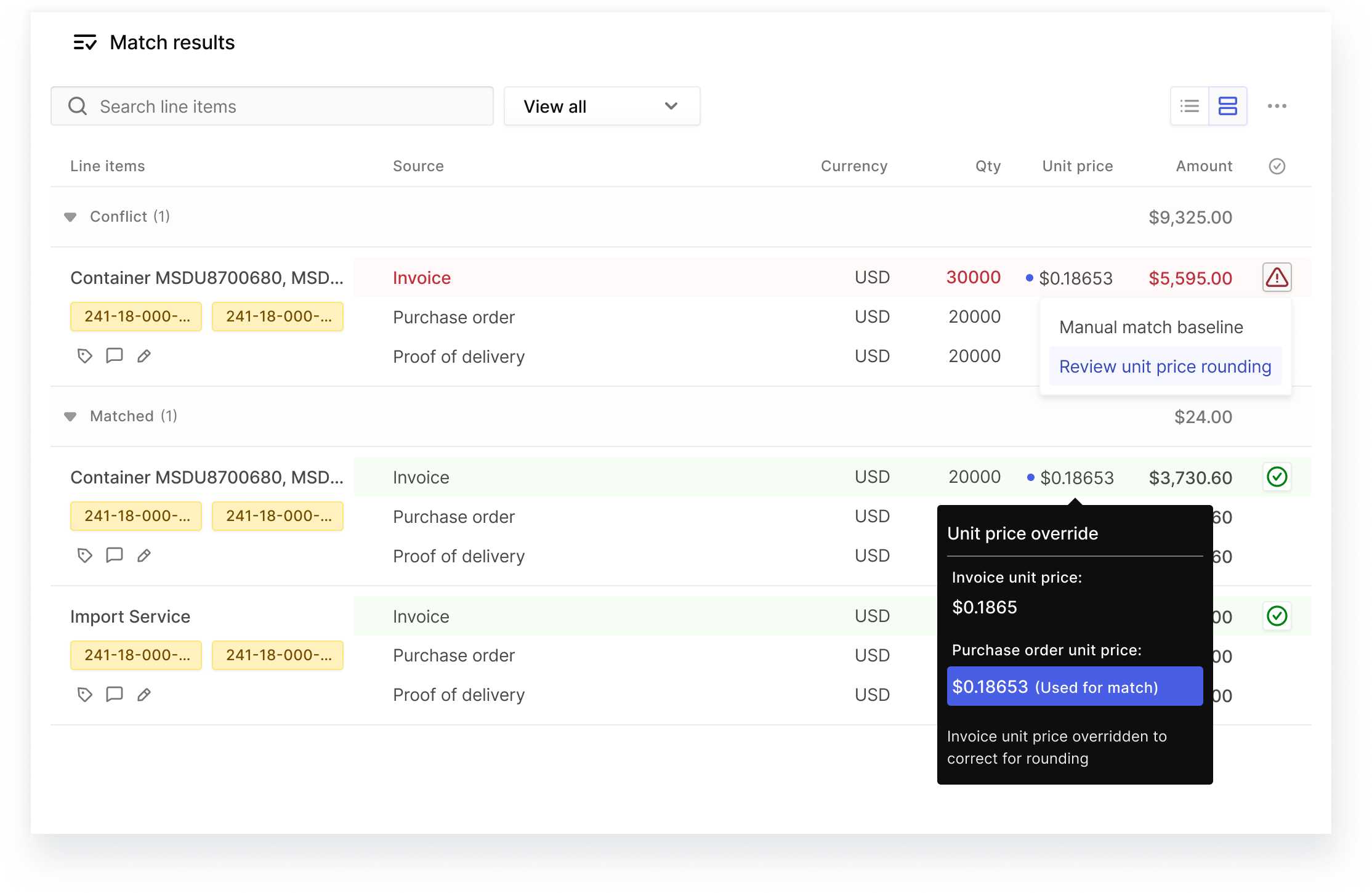

Image 2, Contextual Actions - Resolve Issues on Inspection: To enhance discoverability, I introduced contextual actions within the match results. If users noticed exceptions during their review, they could easily launch the relevant sidebar to resolve issues without disrupting their workflow.

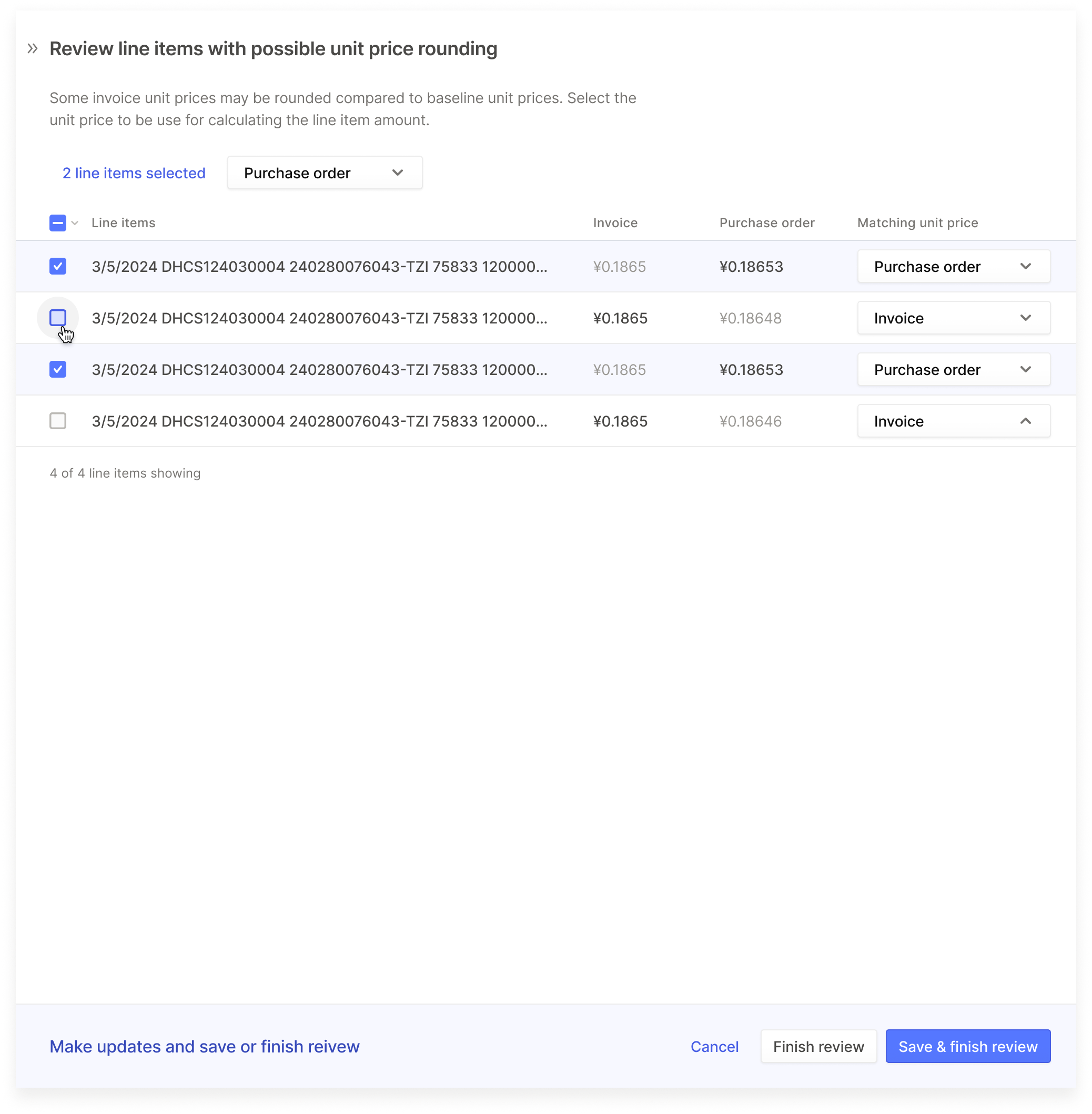

Image 3, Resolving Unit Price Rounding: I designed logic to detect potential rounding discrepancies by comparing invoice and purchase order unit prices. If the two prices on a line differed by no more than a defined tolerance, that line was added to this list. The streamlined list allowed users to quickly compare and override invoice unit prices to resolve the rounding issues.
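To make the tolerance check concrete, here is a minimal sketch of the idea, not OpenEnvoy's actual implementation. The `LinePair` shape, the `find_rounding_candidates` name, and the default tolerance of 0.01 are all assumptions made for illustration; the real tolerance and data model are not described here.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class LinePair:
    """An invoice line paired with its matched PO line (hypothetical shape)."""
    line_id: str
    invoice_unit_price: Decimal
    po_unit_price: Decimal


def find_rounding_candidates(pairs, tolerance=Decimal("0.01")):
    """Return pairs whose unit prices differ, but by no more than `tolerance`.

    The tolerance value is an assumed placeholder, not a documented setting.
    """
    candidates = []
    for pair in pairs:
        diff = abs(pair.invoice_unit_price - pair.po_unit_price)
        # Exact matches are not discrepancies; large gaps are real mismatches.
        if Decimal("0") < diff <= tolerance:
            candidates.append(pair)
    return candidates


pairs = [
    LinePair("1", Decimal("4.3333"), Decimal("4.33")),  # small difference
    LinePair("2", Decimal("10.00"), Decimal("10.00")),  # exact match
    LinePair("3", Decimal("7.50"), Decimal("9.00")),    # genuine mismatch
]
candidates = find_rounding_candidates(pairs)
```

Using `Decimal` rather than floats keeps the comparison itself from introducing new rounding noise, which matters when the whole point is to tell rounding apart from real price differences.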

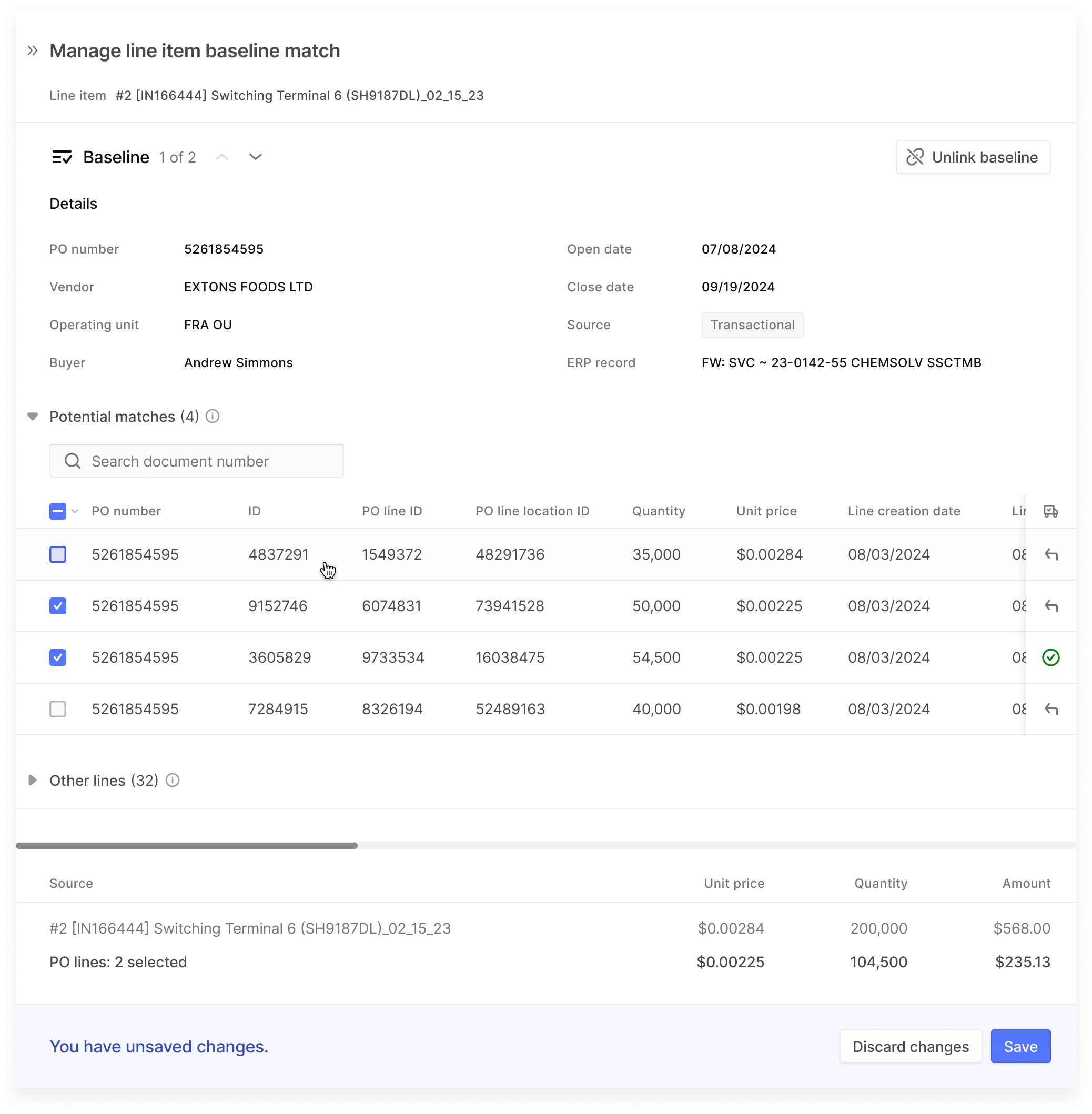

Image 4, Manual Purchase Order Matching: For unmatched purchase order lines, I designed a streamlined sidebar that surfaced potential purchase order matches flagged by the system. Users could manually finalize matches by selecting the appropriate PO lines. This idea would be extendable to other documents in the future.

Image 5, Post-Update Feedback - Clear, Editable Results: The match results reflected all updates, and users could re-edit any line item afterward to make corrections or adjustments.

MEASURING IMPACT

Fewer exceptions, smoother workflows

This initiative empowered users to resolve issues independently, reduced manual interventions, and kept their workflows within OpenEnvoy. Success was measured across three outcomes:

Fewer support tickets related to rounding discrepancies.

Decreased manual processing outside OpenEnvoy, measured by a reduction in deleted jobs.

Streamlined UAT and onboarding by giving users the tools to resolve issues.

KEY LEARNINGS

Humans aren’t getting replaced by AI (at least in finance)

Automation isn’t a silver bullet; users need control. Especially in high-stakes applications like finance, users need tools to resolve issues independently and correctly when automation inevitably fails.

Solve first, optimize later. This was a recurring lesson at OpenEnvoy, and this project reinforced that users can get substantial value from a solution that isn’t yet perfect.

Design for growth from the start. Creating scalable patterns early streamlined both design and implementation as user needs expanded.

This project reinforced a major learning from working on complex systems that leverage AI: automation can streamline processes, but users should always have the final say. When things go wrong, giving users simple tools to resolve issues keeps workflows moving—and builds trust in the product.